Woman Shows How Quickly TikTok’s Algorithm Can Radicalize Users With Far-Right Content

TikTok needs to do better.

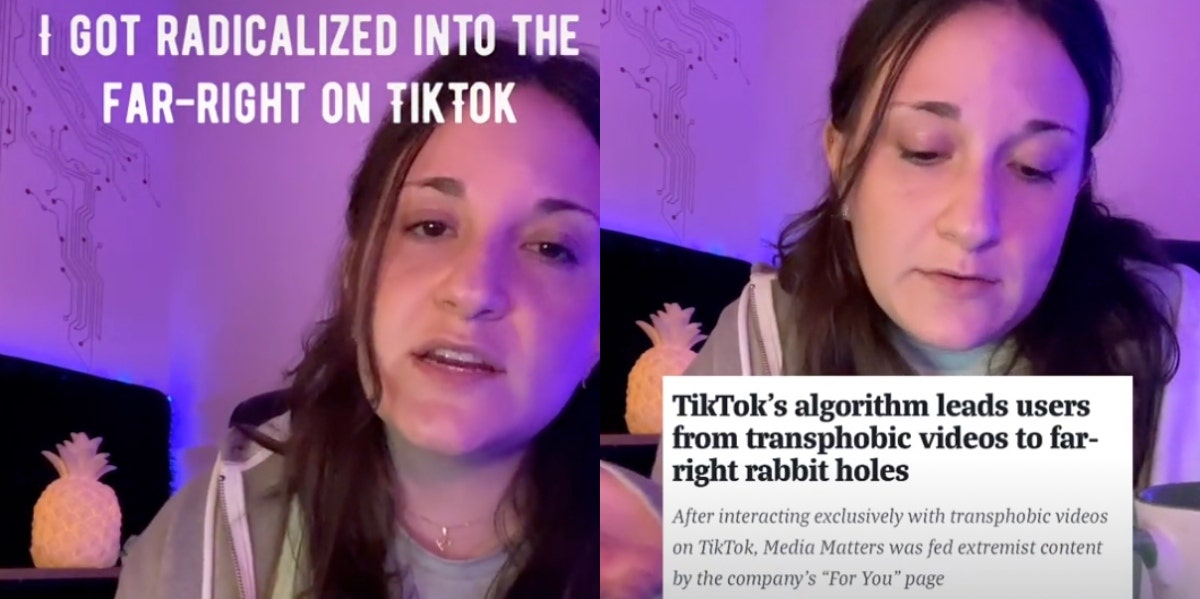

Abbie Richards / TikTok

TikTok user Abbie Richards conducted a study showing how easily someone could become radicalized on TikTok after interacting exclusively with transphobic content.

Richards shared her findings in a video on her TikTok page, explaining that she had created a new TikTok account that engaged only with transphobic content.

“We wanted to examine whether or not transphobia is a gateway prejudice that leads to broader far-right radicalization,” Richards explained in her video. “We wanted to see if being transphobic alone was enough to lead you to the far-right.”

Richards' TikTok series explored how transphobia leads to other hateful movements.

Richards made a brand new TikTok account and followed fourteen creators who were known to post transphobic content.

The list included accounts that degraded trans people, claimed that only “two genders” exist, and mocked the trans experience.

On her TikTok “For You” Page (FYP), Richards interacted only with transphobic content. Even so, she found that her entire FYP was becoming populated with videos promoting far-right views and opinions.

Richards, along with a few other researchers working on the study, coded the first 400 videos that were fed to the account’s FYP.

Much of the far-right content they were shown also included transphobic material, even though such content violates TikTok’s community guidelines.

The community guidelines on TikTok clearly state that the platform does not allow “content that attacks, threatens, incites violence against, or otherwise dehumanizes an individual or group” on the basis of attributes including gender and gender identity.

One of Richards’ key findings was that after she interacted with anti-trans content, TikTok’s recommendation algorithm began showing her more transphobic and homophobic videos, along with other far-right, violent, and hate-filled videos.

Transphobic TikTok content led to other far-right opinions.

Interacting only with anti-trans content prompted TikTok to show Richards other videos with misogynistic content, racist and white supremacist ideologies, anti-vaccine content, antisemitic content, ableist narratives, conspiracy theories, hate symbols, and videos with themes of general violence.

Richards, along with the other researchers, found that out of the 360 total recommended videos included in their analysis, 103 contained anti-trans and/or homophobic narratives, 42 were misogynistic, 29 contained racist narratives or white supremacist messaging, and 14 endorsed violence.

Because TikTok videos are short, Richards estimates that the app was delivering these extreme videos after just two hours of continuous scrolling.

The findings indicate that interacting only with transphobic content on TikTok led the algorithm to treat the user as someone with extremist views, and therefore to show them more far-right content on the app.

This isn’t the first time that TikTok’s algorithm and its community guidelines have been criticized for allowing certain videos on its platform.

A recent study conducted by The Wall Street Journal found that TikTok shows its young users inappropriate adult content, including videos depicting drug use and content promoting pornographic sites.

The Wall Street Journal created dozens of accounts run by automated bots, each registered as a user between the ages of 13 and 15.

An analysis of the videos shown to these accounts found that TikTok’s algorithm can drive its young users into an endless stream of content about sex and drugs.

The videos reportedly shown to these accounts were not limited to sex and drugs; they also included videos about eating disorders and excessive alcohol use, including content showing drinking and driving.

TikTok needs to take a closer look at the types of videos that appear to violate its community guidelines but remain available on the app.

Nia Tipton is a writer living in Brooklyn. She covers pop culture, social justice issues, and trending topics. Follow her on Instagram.